ParaLLEl RDP has single-handedly caused a breakthrough in N64 emulation this year. For the first time, the accurate but very CPU-intensive Angrylion renderer has been lifted from the CPU to the GPU, thanks to the powerful low-level graphics API Vulkan. Combined with a dynarec-powered RSP plugin, this finally makes low-level N64 emulation possible for the masses, at great speeds on modest hardware configurations.

ParaLLEl RDP will be coming to Mupen64Plus Next soon

ParaLLEl RDP made its debut in ParaLLEl N64, but it will soon make its way into the upcoming version of Mupen64Plus Next too. Expect increased compatibility over ParaLLEl N64 (especially on Android) and potentially better performance in many games.

ParaLLEl RDP Upscaling

But that’s not what this article is dedicated to. It quickly became apparent after launching ParaLLEl RDP that users have grown accustomed to seeing upscaled N64 graphics over the past 20 years. So a renderer that outputs at native resolution, however accurate and bit-exact, was unpalatable to them. Over the past few weeks, many users have told us they want upscaling.

Well, you won’t have to wait too long. As a demonstration, today we premiere an 11-minute-long YouTube video showcasing ParaLLEl RDP running at 4 times the native resolution. Given an input resolution of 256×224, that means the game is rendering internally at 1024×896.

Now, here comes the good stuff with LLE RDP emulation. Unlike so many HLE renderers, ParaLLEl RDP fully emulates the RCP’s Video Interface (VI). As part of its postprocessing routines, the VI automatically applies the equivalent of 8x MSAA (Multi-Sampled Anti-Aliasing) to the image. This means that even though our internal resolution might be 1024×896, the image is then smoothed out further by this aggressive multisampling postprocessing step.

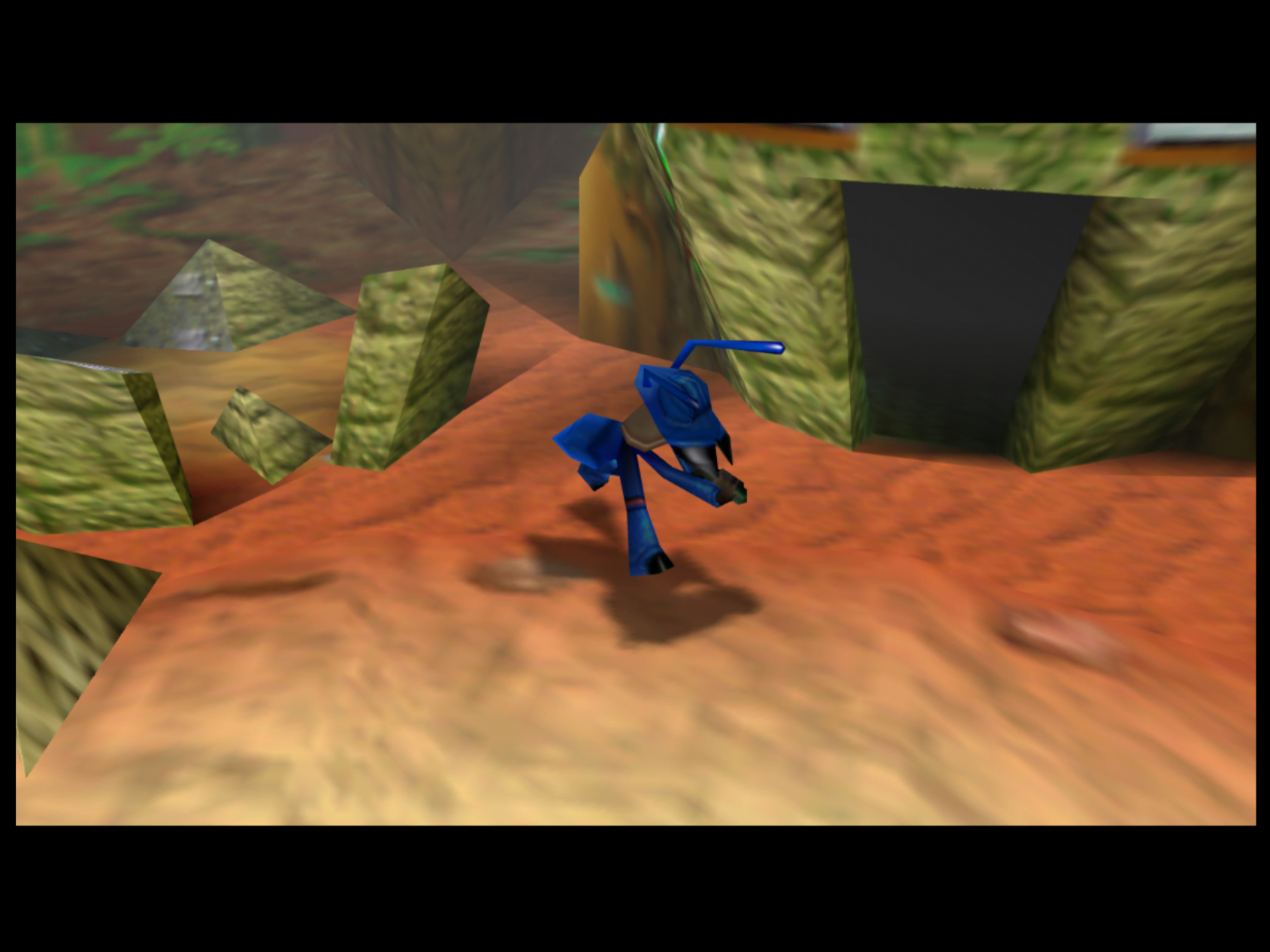

As a result, even games running at just 2x native resolution look significantly better than they do at the same resolution on an HLE RDP renderer. Look, for instance, at this Mario 64 screenshot, with the game running at a 2x internal upscale (512×448).

Screenshots

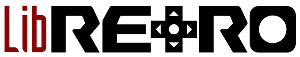

The screenshots below show ParaLLEl RDP running at its maximum internal resolution, 8x the original native image. This means that when your game is running at, say, 256×224, it would be rendering at 2048×1792. But if your game is running at, say, 640×480 (some interlaced games actually set the resolution that high, Indiana Jones IIRC), then we’d be looking at 5120×3840. That’s bigger than 4K!

Bear in mind that you’re going to get the VI’s 8x MSAA on top of that, and you can probably begin to imagine just how demanding this is on your GPU, given that it’s running a custom software rasterizer on the GPU. Suffice it to say, the demands for 2x and 4x will probably not be too steep, but if you’re thinking of using 8x, you’d better bring some serious GPU horsepower. For starters, you’ll need at least 5-6GB of VRAM for 8x internal resolution.
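To make the resolution arithmetic above concrete, here is a minimal Python sketch. It is purely illustrative: the function names `internal_resolution` and `pixel_count` are hypothetical helpers and not part of ParaLLEl RDP, and the 3840×2160 figure used for "4K" is an assumption about which 4K standard is meant.

```python
from typing import Tuple

Resolution = Tuple[int, int]

# Assumption: "4K" here means the common 3840x2160 display resolution.
UHD_4K: Resolution = (3840, 2160)


def internal_resolution(native: Resolution, scale: int) -> Resolution:
    """Multiply both native dimensions by the integer upscaling factor."""
    if scale < 1:
        raise ValueError("scale must be a positive integer")
    width, height = native
    return width * scale, height * scale


def pixel_count(res: Resolution) -> int:
    """Total number of pixels in a frame of the given resolution."""
    return res[0] * res[1]


if __name__ == "__main__":
    # The two native resolutions and three scale factors mentioned in the article.
    for native in [(256, 224), (640, 480)]:
        for scale in (2, 4, 8):
            res = internal_resolution(native, scale)
            ratio = pixel_count(res) / pixel_count(UHD_4K)
            print(f"{native[0]}x{native[1]} at {scale}x -> "
                  f"{res[0]}x{res[1]} ({ratio:.2f}x the pixels of 4K)")
```

This only counts pixels. It does not model VRAM use or the cost of the VI postprocessing, so it should not be read as a performance estimate.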

Anyway, without further ado, here are some glorious screenshots. GoldenEye 007 now looks dangerously close to the upscaled bullshot images on the back of the box!

So where is it?

No ETAs, but it’s coming soon and will be available on RetroArch for Windows, Linux and Android. Stay tuned!