The PlayStation2 is a system designed almost entirely from the ground up for use with CRT TVs. Like any other game console built around analog video output, it is not designed around pixels or resolution, but scanlines and timing. Yes, there is a way to attach a VGA monitor for the official PS2 Linux toolkit so there are some VESA display modes as well, but this is more of an afterthought, and almost no real commercial game used this.

Acronyms used in this article: PS2 (PlayStation2), CRT (Cathode Ray Tube, old TVs that were replaced by HDTVs), GS (Graphics Synthesizer, the PS2’s GPU), PAL (television signal for CRT TVs in Europe), NTSC (television signal for CRT TVs in North America and Japan), CRTC (Cathode Ray Tube Controller, part of the PS2’s GPU), EDTV (Enhanced Definition TV or Extended Definition TV, an SDTV that supported progressive scan display modes), VU0/VU1 (Vector Unit 0/Vector Unit 1, two SIMD coprocessors of the PS2)

Sticking locked to 60fps to avoid bad image quality

The PlayStation2’s 4MB embedded VRAM for the Graphics Synthesizer (its GPU) was usually not big enough to hold a full 640×480 framebuffer (or something even bigger). Sony would insist developers not think of it as VRAM but rather a scratchpad, but there’s still only so much you can do with 4MB for render targets.
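To put the 4MB figure in perspective, here is a rough back-of-the-envelope budget in Python. It assumes a front buffer, a back buffer and a depth buffer, all at 640×480 and 32 bits per pixel. That layout is an illustrative assumption (real games mixed sizes and pixel formats), and the helper names are made up for this sketch.

```python
# Rough GS eDRAM budget. The buffer layout and formats are assumptions for
# illustration; real games picked their own sizes and pixel formats.
GS_EDRAM_BYTES = 4 * 1024 * 1024  # 4MB of embedded DRAM


def buffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size of one linear buffer in bytes (ignores any alignment padding)."""
    return width * height * bits_per_pixel // 8


def remaining_after_buffers(width: int, height: int, bits_per_pixel: int = 32) -> int:
    """Bytes left for textures after front, back and depth buffers."""
    one_buffer = buffer_bytes(width, height, bits_per_pixel)
    return GS_EDRAM_BYTES - 3 * one_buffer


if __name__ == "__main__":
    print("one 640x480x32 buffer:", buffer_bytes(640, 480, 32), "bytes")
    print("left for textures:    ", remaining_after_buffers(640, 480), "bytes")
```

Under these assumptions the three buffers take roughly 3.5MB, leaving under 0.5MB for textures and everything else, which is why developers looked for smaller render targets.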

However, the bandwidth of the GS was unmatched. Things like alpha blending, multipass rendering and framebuffer copies, which are expensive on most other GPUs, were nearly free on the PS2. In fact, many games like Driv3r abuse some of the GS’ strengths in ways that would bring any other GPU to its knees. Thanks to the fully programmable geometry pipeline provided by its two vector units (VU0 and VU1), the PS2 had capabilities comparable to mesh shaders, which we have only started to see introduced on hardware like the GeForce RTX 20 series almost 18 years later. But we digress.

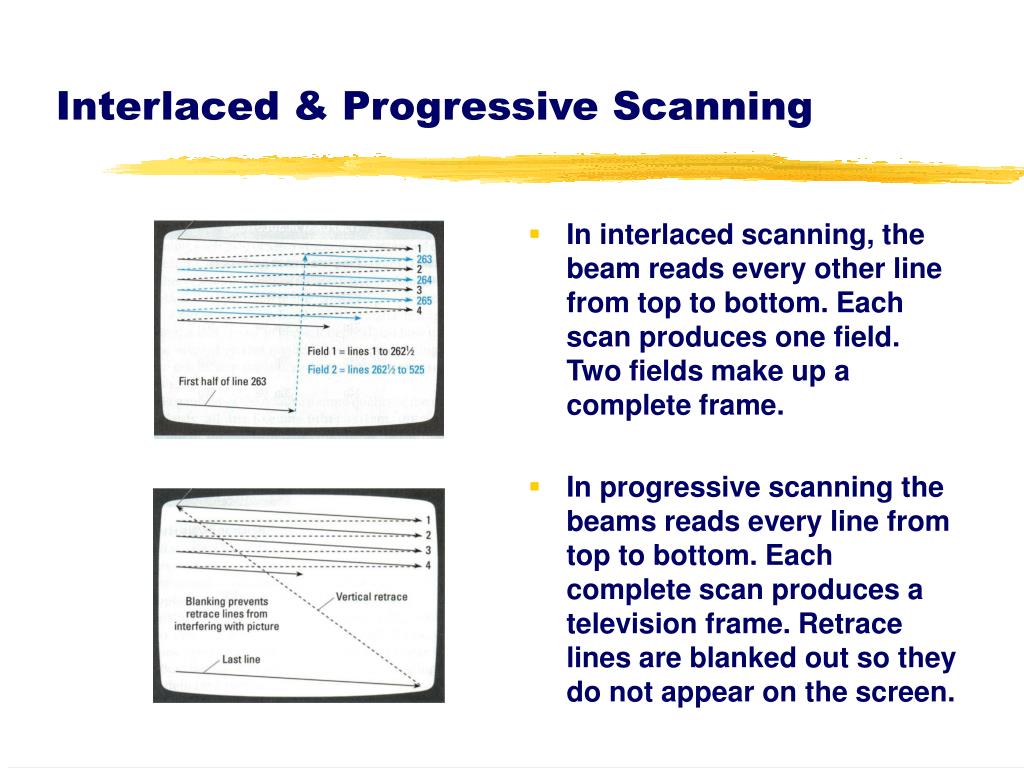

Developers were almost incentivized by the hardware to make sure their games maintained a solid 60Hz/60fps on NTSC (or 50Hz/50fps on PAL). How did Sony do this? It was perhaps not an intentional initiative but the end result was the same. For one, early versions of the SDK only supported interlaced scanline modes which required 60Hz to get 640×448. Later on, game developers had the option of either using frame mode (full frames) or field rendered mode (interlaced frames).

Field rendering being interlaced means the memory requirements per frame could be halved because the frame is output at 640×240 or even as low as 512×224. This was important because, as we said before, the PS2’s GPU only had 4MB of embedded DRAM to work with, and you had to store your framebuffers there. A further benefit is that the time needed to render the final output image is also reduced. So to many developers it seemed like field rendering mode was the way to go if you wanted to make a fast performing game on the PS2.

So where is the catch? Here comes the big caveat, and the reason why PS2 games rarely skip a beat and try to ensure a perfectly locked 60fps. If you miss a frame and the previous one has to be displayed twice, you will see the whole image shift vertically by one line. It was therefore imperative to ensure this did NOT happen. So what most games would do when in danger of missing their 60fps target is slow the game down internally by skipping frames (like SSX 3) while continuing to aim for 60Hz/60fps.

The other option was frame mode, rendering full frames. Render times obviously go up compared to field rendered mode because you’re rendering full fat frames (640×448 or 512×448), and therefore it might be harder to hit a consistent 60fps vs. field rendering mode. However, the system is more forgiving when you don’t render a new frame in time. The screen would just end up showing the second field from the previous frame.
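The difference between the two modes when a frame is missed can be sketched with a toy model in Python. It assumes the display alternates between even and odd scanlines on every vblank, that a field-rendered buffer only holds the lines for the vblank it was rendered for, and that a frame-rendered buffer holds both sets of lines. This is a simplification for illustration, not a model of the real GS or CRTC, and the function name is made up.

```python
# Toy model of interlaced output: even scanlines on even vblanks, odd
# scanlines on odd vblanks. Not a model of the real hardware.

def count_shifted_fields(frame_ready: list[bool], field_rendered: bool) -> int:
    """Count vblanks on which the picture appears shifted by one line.

    frame_ready[i] says whether the game finished rendering in time for
    vblank i. field_rendered selects field mode (True) or frame mode (False).
    """
    shifted = 0
    buffer_parity = 0  # which set of scanlines the buffered field was drawn for
    for vblank, ready in enumerate(frame_ready):
        display_parity = vblank % 2
        if ready:
            buffer_parity = display_parity  # fresh field matches this vblank
        if field_rendered and buffer_parity != display_parity:
            # The stale field is shown again on the opposite set of scanlines.
            shifted += 1
        # In frame mode the buffer holds both fields, so the lines matching
        # display_parity are always available and nothing shifts.
    return shifted


if __name__ == "__main__":
    timeline = [True, True, False, True, True, False, False, True]
    print("field mode shifts:", count_shifted_fields(timeline, field_rendered=True))
    print("frame mode shifts:", count_shifted_fields(timeline, field_rendered=False))
```

In this model every missed frame that lands on the opposite field shows up as a one-line shift in field mode, while frame mode never shifts.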

So, to recap: if a game could maintain a consistently frame-paced 60fps, field rendering mode (i.e. interlaced mode) would look as expected. Your CRT would blend the half frames and make them look like a full frame. The average end user would be none the wiser about the inner workings of how a CRT assembles and outputs a picture, and you have the big advantage that this mode is faster than frame mode, so it is easier to hit a high framerate like 60fps. If the frame rate were all over the place, it would lead to a bad picture as discussed in the earlier paragraphs. It was therefore on the developer to either slow the game down internally or make sure it ran at a rock solid framerate.

Because field rendering was a viable and fast option on PS2 as long as you could ensure 60fps/60Hz, the CRT saved the day and the PS2 could get away with its generally unimpressive display resolutions.

It has not gone unnoticed by many that PlayStation2 has by far the largest number of 60fps/60Hz games available (especially on launch day). Turns out there was a technical reason behind this that forced developers’ hands as much as it was developers really wanting to push themselves to the limit.

So if you ever wondered why PS2 games seem to so rarely miss their frametime targets while framerates jump all over the place on other consoles (even Xbox OG and GameCube, its two competitors from that generation), now you know.

We remember PS2 launch games drawing heavy criticism at the time for jagged edges (colloquially referred to as ‘jaggies’) and the lack of anti-aliasing, especially compared to the Dreamcast. What compounded this problem was that many game magazines and journalists could only do single frame capture. So when they took screenshots or pictures, they would only get one of the two fields in their pictures. So you’d get bad screenshots in magazines with only the odd or even lines being shown, leading to PS2 games looking much more jaggy in print than they actually did on a real screen. In reality the problem was not so bad, but the lower output resolution needed to fit in GS eDRAM probably did not help with the misunderstandings and misconceptions.

Widescreen, CRTs and the PS2

Let’s discuss widescreen display modes now and their use on CRT TVs. While a few PlayStation1 games had been adventurous and started implementing widescreen modes, it could be safely assumed that up until now the vast majority of console games were all designed around 4:3 aspect ratios. However, this was soon about to change, thanks in no small part to the PS2. The PS2 doubled as a DVD player and the term ‘anamorphic widescreen’ started being thrown around a lot. Widescreen 16:9 CRT TVs started becoming more mainstream around the early to mid ‘00s. Most games made for PS2 were still designed around a 4:3 aspect ratio of course, but gradually more and more started offering built-in widescreen modes as demand grew. There are 3 general ways the PS2 can go about displaying a widescreen picture (or in general really):

- Hor+ (Hor Plus) – Extends the horizontal sides of the screen to fill out the screen. Usually the best approach (and almost never used on the real PS2, which is why we need all these widescreen patches)

- Vert- (Vert Minus) – Chops off some of the top and bottom sides of the screen while zooming in on the center

- Hor+ and Vert- (Hor Plus + Vert Minus) – A bit of both

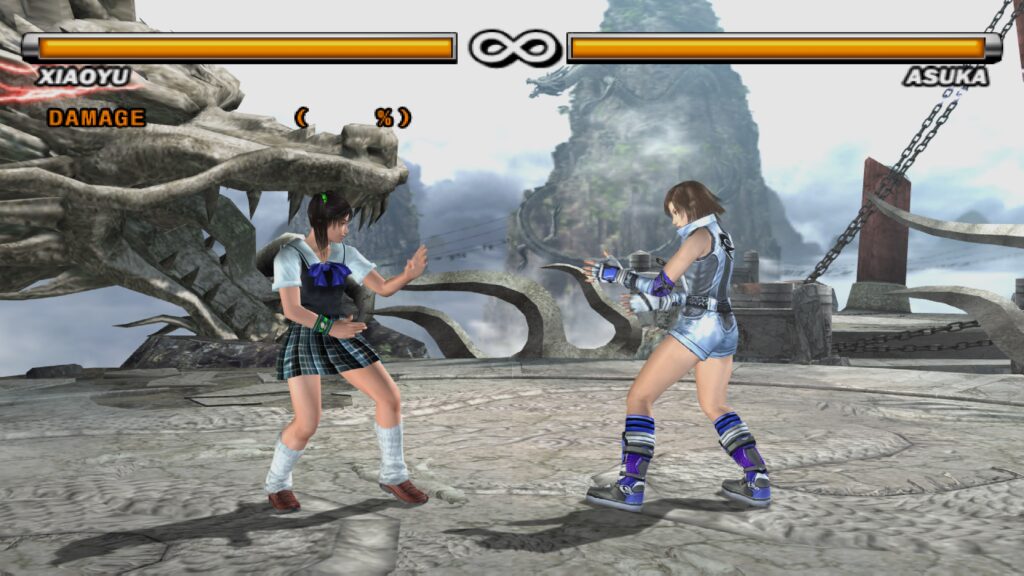

The vast majority of PS2 games unsurprisingly opt for the ‘worst’ of these 3 options when implementing a widescreen mode, Vert-. In some rare cases you will see the 3rd option (Hor+ and Vert-) used as well. Games like Tekken 5 therefore have a ‘quasi-widescreen’ mode, where you lose parts of the screen at the top and bottom that are considered unimportant to the gameplay but then it zooms in slightly in the center to make it fit within a 16:9 aspect ratio. All Ratchet & Clank and Jak and Daxter games are Vert- as well.
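The difference between Hor+ and Vert- can be expressed in terms of field of view. The Python sketch below assumes a simple perspective camera authored for 4:3 with a known vertical field of view. Hor+ keeps the vertical angle and widens the horizontal one, while Vert- keeps the horizontal angle and narrows the vertical one. The function names and the 60 degree starting value are illustrative assumptions, not taken from any particular game.

```python
import math


def horizontal_fov(vertical_fov_deg: float, aspect: float) -> float:
    """Horizontal FOV (degrees) of a perspective camera with this aspect ratio."""
    half_v = math.radians(vertical_fov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_v) * aspect))


def vertical_fov(horizontal_fov_deg: float, aspect: float) -> float:
    """Vertical FOV (degrees) of a perspective camera with this aspect ratio."""
    half_h = math.radians(horizontal_fov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))


def widescreen_fov(base_vfov_deg: float, mode: str) -> tuple[float, float]:
    """Return (hfov, vfov) in degrees at 16:9 for a game authored at 4:3."""
    if mode == "hor+":
        # Same vertical view, more of the world visible left and right.
        return horizontal_fov(base_vfov_deg, 16 / 9), base_vfov_deg
    if mode == "vert-":
        # Same horizontal view, top and bottom are cropped.
        base_hfov = horizontal_fov(base_vfov_deg, 4 / 3)
        return base_hfov, vertical_fov(base_hfov, 16 / 9)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    for mode in ("hor+", "vert-"):
        hfov, vfov = widescreen_fov(60.0, mode)
        print(f"{mode}: hfov={hfov:.1f} vfov={vfov:.1f}")
```

With Vert- the visible vertical extent drops to 75% of the 4:3 picture (the ratio of 4:3 to 16:9), which is why characters appear larger. The mixed Hor+ and Vert- approach lands somewhere between the two results.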

It was of course possible to do a Hor+ widescreen game on a PS2, but the developer had to consider system resources, GS embedded VRAM usage, etc. Zooming/scaling was free on the GS and chopping off some parts of the image probably made sure things still fit within the 4MB GS VRAM, so Vert- was probably the easiest solution. With Hor+, the horizontal resolution also comes into play. The more you extend the horizontal sides of the screen, the higher your horizontal resolution needs to be to still maintain a good picture quality. And the PS2 already relied on the CRT’s ability to make interlaced scanlines look really good to get away with lower than average resolutions.

Below you see Tekken 5’s built-in ‘widescreen’ mode. This is how it would look on a real PS2 with a widescreen CRT TV. As you can see, this shows all the signs of a Vert- aspect ratio. Parts of the top and bottom are cropped while the image is zoomed in on the center. The two characters appear much larger than they would in 4:3 mode. Widescreen on 6th generation consoles was often an exercise in frustration for the enthusiast. So many games would simply give you Vert- and not really take advantage of the extra screen real estate.

Below you will see the ‘correct’ widescreen mode. This is an internal widescreen patch in the LRPS2 core that corrects the widescreen mode to Hor+. Now more of the game world is being rendered onscreen because the image is extended horizontally, and no cropping is being done.

Progressive scan

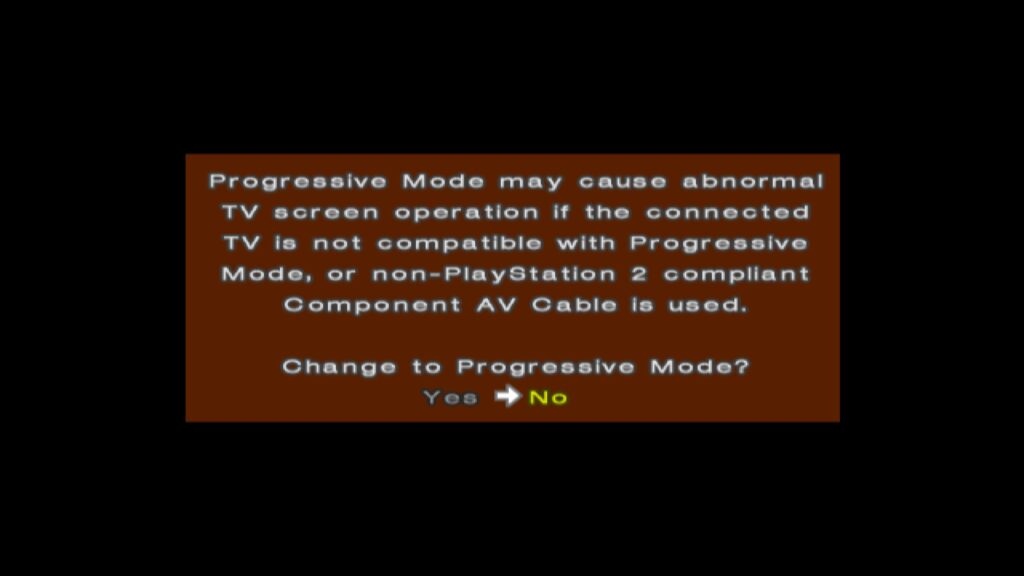

The PS2 released near the tail end of the CRT TV’s life. HD-ready LCD TVs would not start arriving until 2005 and so TV makers were still trying to push the CRT forward in whatever ways they could. As we have established before, CRT TVs are analog video. To make the move to digital television (DTV) on a CRT, new specifications such as EDTV were necessary. EDTV stood for Enhanced-definition television or Extended Definition Television. In practice it meant that SDTVs were being made that could support 480-line and 576-line signals in progressive scan (480p and 576p). Around 2001, progressive scan-capable CRT TVs started being sold, and games started taking advantage of this.

You needed either a component cable (on NTSC TVs) or RGB SCART cables (Japanese and European TVs) in order to be able to use progressive scan display modes. Composite and RF-AV cables did not support this feature.

By pressing and holding X and Triangle at startup, a progressive scan-supported game would ask the user to choose between normal and progressive scan mode. Progressive scan modes are non-interlaced full frame modes, therefore there would be none of the interlacing artefacts and you’d get full-height backbuffers.

The only disadvantage of some of these progressive scan-capable games is that they sometimes reduce the framebuffer depth to 16bpp or lower so that everything can still fit inside the Graphics Synthesizer’s 4MB eDRAM. This is not a general rule but some games do make that tradeoff, so you’re trading no interlacing artefacts for a somewhat lower quality final output image (i.e. more color banding).
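Where the extra banding comes from can be shown with a small Python sketch. It assumes a 16bpp format that stores 5 bits per color channel instead of the 8 bits of a 32bpp format, and it ignores dithering, which games could use to hide the effect. The function names are made up for illustration.

```python
def quantize_channel(value: int, bits: int) -> int:
    """Reduce an 8-bit channel value to `bits` bits, then expand back to 8 bits."""
    levels = (1 << bits) - 1
    reduced = round(value * levels / 255)
    return round(reduced * 255 / levels)


def distinct_shades(bits: int) -> int:
    """Number of different output shades a full 0..255 gradient ends up with."""
    return len({quantize_channel(value, bits) for value in range(256)})


if __name__ == "__main__":
    print("8 bits per channel:", distinct_shades(8), "shades")
    print("5 bits per channel:", distinct_shades(5), "shades")
```

A smooth gradient that had 256 steps per channel is left with 32, so neighbouring pixels jump in visible bands instead of blending smoothly.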

On average though, progressive scan would still look better than interlaced mode to most people. Some games like Valkyrie Profile 2 and Gran Turismo 4 would even offer a 1080i mode. Note that this is a bit deceptive: 1080i is an interlaced mode, not a progressive one, and it doesn’t actually make the PS2 output at a full 1920×1080 resolution. It’s just a bit of advanced framebuffer shenanigans to make it ‘look’ higher res than it actually is. For these games, 480p progressive scan mode usually looks better on modern displays.

Let’s for instance analyze Gran Turismo 4’s ‘1080i’ mode. The internal render resolution is actually 640×540. The GS’ CRTC then magnifies this to ‘1920×1080’. The width of 640 is ‘magnified’ to 1920 via a horizontal Magnification Integer (MAGH) of ‘3’, so 640 × 3 = 1920. The height of 540 meanwhile is magnified either by a vertical Magnification Integer (MAGV) of ‘2’ (540 × 2 = 1080), or by the interlaced framebuffer switch. So it’s basically a bit of GS CRTC zoom scaling going on. On a CRT this probably looked convincing enough at the time.
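The arithmetic above can be written out as a short Python sketch. The function names are made up for illustration, and the magnification factors are passed as plain multipliers rather than as raw register values.

```python
def crtc_output_size(render_w: int, render_h: int, magh: int, magv: int) -> tuple[int, int]:
    """Output size after integer magnification of the rendered image."""
    return render_w * magh, render_h * magv


def rendered_fraction(render_w: int, render_h: int, out_w: int, out_h: int) -> float:
    """Share of the output pixels that were actually rendered."""
    return (render_w * render_h) / (out_w * out_h)


if __name__ == "__main__":
    out_w, out_h = crtc_output_size(640, 540, magh=3, magv=2)
    print("output size:", out_w, "x", out_h)
    print("rendered fraction:", rendered_fraction(640, 540, out_w, out_h))
```

Only one sixth of the 1920×1080 output pixels are actually rendered in this mode, and the rest is magnification.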

Europe and progressive scan (or the lack thereof)

Note that for European versions of games, progressive scan modes would sometimes be stripped out or removed (God of War 2, Soul Calibur 3). This was probably because of the low adoption rates of progressive scan-capable TVs.

Europe and PAL CRTs (50Hz vs 60Hz)

In fact Europeans had plenty of other issues on top. For those that don’t know, in Europe they used PAL signal CRT TVs while in Japan and the United States, NTSC signal CRT TVs were the standard. PAL ran at 50Hz while NTSC ran at 60Hz.

When PS2 launched in Europe, most Dreamcast games already offered the choice between a PAL 50Hz mode and a PAL60 mode. PAL60 (on TVs that supported it) would give a 60Hz image, thereby avoiding the 16.7% reduction in frame rate (50Hz instead of 60Hz) seen in many PAL conversions, as well as the additional letterboxing. The added letterboxing was because PAL typically had a higher output resolution than NTSC, so an unmodified NTSC image would not fill the screen. However, games most of the time would not bother taking advantage of the extra resolution, either because they were already nearing the ceiling on system resources or simply because their developers didn’t care about the European/PAL market enough to cater to it.
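Both penalties of an unoptimized PAL conversion follow from simple ratios, sketched below in Python. The nominal figures of 480 visible lines for NTSC and 576 for PAL are assumptions for illustration (actual PS2 games typically rendered fewer lines, such as 448 or 512), and the function names are made up.

```python
def slowdown_percent(source_hz: float, target_hz: float) -> float:
    """How much slower a game runs if its logic is tied to the refresh rate."""
    return (1 - target_hz / source_hz) * 100


def letterbox_percent(image_lines: int, display_lines: int) -> float:
    """Share of the screen height left black when the image is not rescaled."""
    return (1 - image_lines / display_lines) * 100


if __name__ == "__main__":
    print(f"60Hz -> 50Hz slowdown: {slowdown_percent(60, 50):.1f}%")
    print(f"480 lines on a 576-line display: {letterbox_percent(480, 576):.1f}% black bars")
```

Under these assumptions both numbers come out the same, because 50/60 and 480/576 are both five sixths.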

The situation on PS2 was more complicated. PAL60 was not a real standard so Sony refused to back it. This meant that most PS2 launch games (probably all of them) sadly did not have any 60Hz options, so we were stuck with 50Hz for a while.

Developers that were usually good about providing PAL-optimized games were UK developers like Psygnosis (Wipeout, Destruction Derby), Core Design (Tomb Raider) and Rockstar/DMA Design (Grand Theft Auto). You’d get a 50Hz mode with more scanlines being rendered than the NTSC version, and therefore better image quality. However, the game would still run slower because of 50Hz. Some developers would compensate for this by tweaking the game speed, but in general it would almost always be worse than the same game being run at 60Hz.

No PAL60 for PS2, only NTSC for you

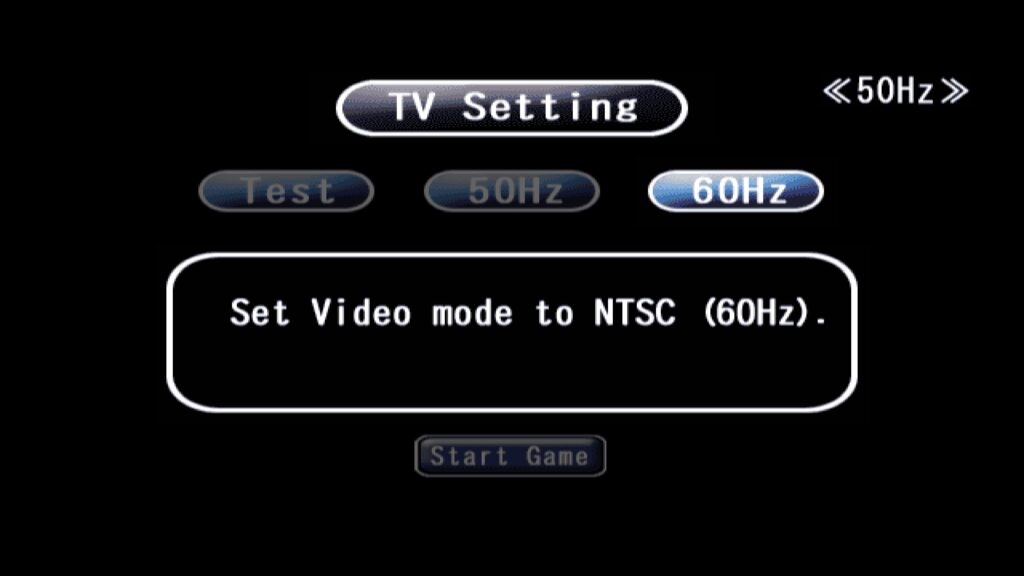

Anyway, around 2002 more and more games, like ICO, started having options at startup allowing you to choose between a 50Hz and 60Hz mode. How the PS2 dealt with this compared to the Dreamcast was that instead of allowing you to choose between PAL and PAL60 modes, the game would try to switch to NTSC 480i mode instead. Most televisions sold in Europe around the late ’90s actually supported both PAL and NTSC, so this wasn’t much of a compatibility issue. The games that still didn’t offer these PAL/NTSC selectors (like Silent Hill 2 and Metal Gear Solid 2) would put a bit more effort into their PAL conversions and not have the dreaded letterboxing.

The 50Hz/60Hz mode was not an easy thing to crack for many developers. Developers like Square would complain about having to ship a 50Hz and a 60Hz version of each FMV scene, and because their games were so front loaded with big, high-quality FMV scenes, it was not really possible to fit both on the DVD. This is the reason why games like Final Fantasy X stuck to 50Hz despite the developer being aware of the growing demand for an NTSC 60Hz mode. Eventually the games that did not have these 50Hz/60Hz toggles would become the exception to the rule.

Moving to LCD/HDTVs in the mid ‘00s for the 7th generation

The industry-wide move from CRTs to LCD TVs was a rough one. This happened sometime around 2005 when new consoles were being prepared. The PS2 of course was still around and wouldn’t really die until around late 2007. There were definitely advantages for the upcoming 7th generation consoles, like the PlayStation3 and Xbox 360. Gone were the days of PAL vs. NTSC. Europeans would no longer have to worry about whether a game would implement a PAL60/60Hz mode. As long as you hooked up your HDMI-capable game console to an HDMI-capable display, your game would output at 60Hz. And of course there was the promise of non-interlaced high resolutions by default. Not a lot of people ever had a progressive scan-capable TV, so this would be their first time seeing a 480p or 720p image on a television.

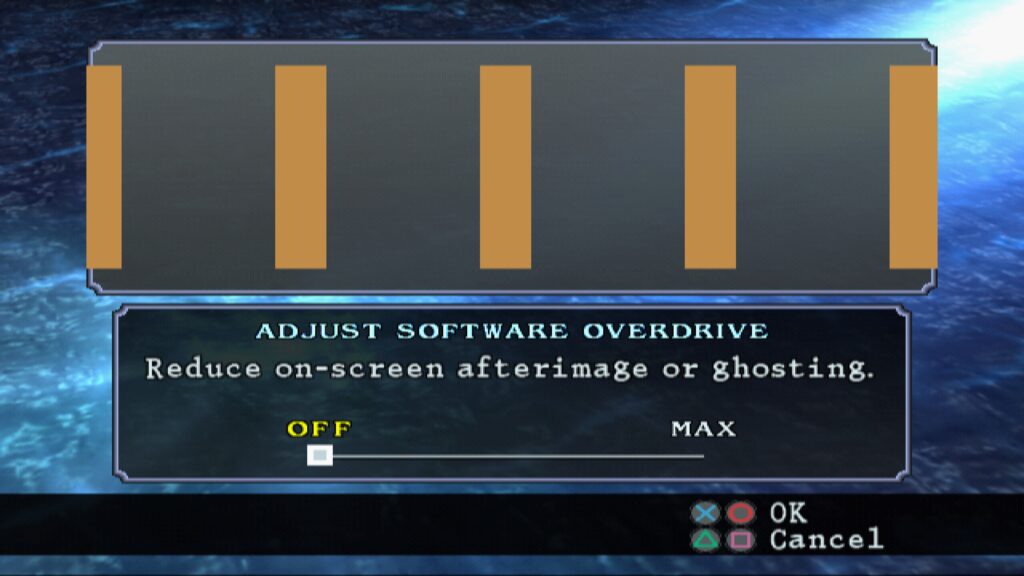

It would take time for people to catch on, but early LCD HD-ready TVs were often of very poor quality with high amounts of latency and ghosting. CRT-based game consoles (such as the PS2) looked especially poor on them. In fact, for the older sixth generation consoles, there were almost no advantages to be had using them on a non-CRT display. Feedback blur (used to great effect in many PlayStation2 games for motion blur effects) would look great on a CRT, but looked disastrous on these early LCD screens with the heavy ghosting they were known for. Some games like Soul Calibur 3 would have an in-game setting (Software Overdrive) that would try to reduce the afterimage effects on LCD screens.

There was no fixing the latency though or the lack of motion clarity, and this problem would persist for decades. Motion clarity in fact is only now finally being addressed on modern displays for these consoles with the arrival of BlurBusters’ ‘CRT beam racing simulator’ (see our article here for more details). On a modern OLED screen in 202x we can finally enjoy near CRT-like latency with the motion clarity of a CRT (CRT beam simulator shader) AND the looks of a CRT (any advanced CRT shader).